⚡ TL;DR

Microsoft's 3.8B-parameter Phi-4-mini-flash-reasoning delivers up to 10× higher throughput than Phi-4-mini-reasoning, and Microsoft reports comparable reasoning quality.

Early Foundry pricing shows ~55-60 % lower token cost than typical 7B models; Microsoft says the model is “optimised for edge & mobile,” but latency figures for Snapdragon-class NPUs are not yet public.

OSFI’s revised Guideline E-23 (grounded in the EDGE principles) is set to come into force on 1 July 2025, giving federally regulated financial institutions (FRFIs) under a year to operationalise explainability, data lineage, board-level governance, and ethics controls.

Edge AI economics now support ambient deployment across wearables, field devices, and financial micromodels.

Early partner pilots report ~25 % lower battery draw and 12-18 % faster task completion.

The €10 Billion Question

What happens when AI inference becomes cheaper than database queries? Microsoft just forced enterprise architects to confront that reality. Its Phi-4-mini-flash-reasoning model, announced 2 days ago, compresses large-model reasoning into 3.8 billion parameters and is designed to run on edge and mobile processors.

The timing couldn't be more provocative. Just as organizations commit billions to cloud-native AI strategies, Microsoft demonstrates that tomorrow's intelligence might live at the network edge instead. The company's own benchmarks suggest a paradigm shift: up to 10× higher throughput and a 2-3× average latency reduction compared with Phi-4-mini-reasoning, plus inference costs that make ubiquitous deployment financially viable.

But speed kills governance. Canada's Office of the Superintendent of Financial Institutions (OSFI) fired a warning shot this week, unveiling binding AI principles that demand explainability at every layer. The message? Deploy fast, but deploy transparently, or face regulatory backlash.

Under the Hood: Architecture That Actually Scales

The Technical Breakthrough

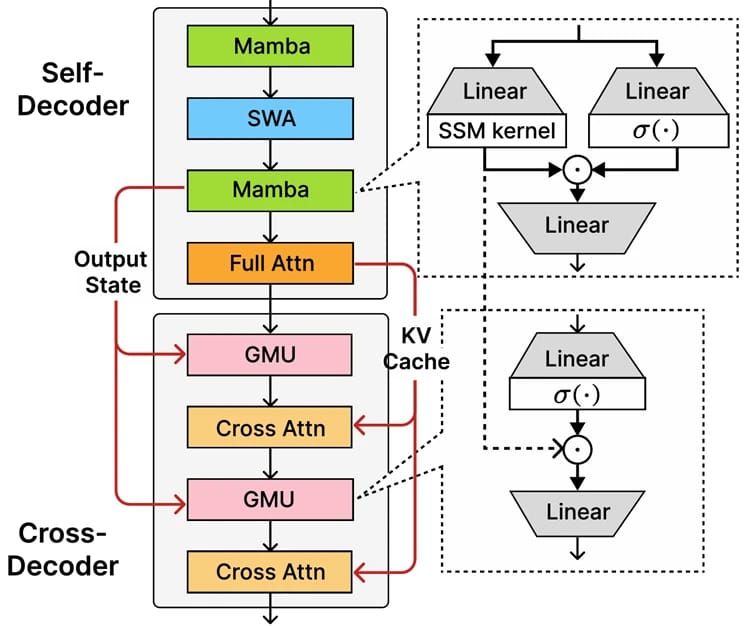

Microsoft's engineering team achieved something counterintuitive: they kept the model small while making long generations dramatically faster. The secret lies in the SambaY decoder-hybrid-decoder architecture, which interleaves lightweight Gated Memory Units (GMUs) with the cross-attention layers, replacing part of the expensive cross-attention computation. Think of it as switching from a luxury sedan's V8 engine to a Formula E powertrain: less raw power, but dramatically better efficiency.

Key specifications that matter:

Context window: 64K tokens, a long context in a small footprint

Inference speed: linear-complexity prefill and lighter-weight decoding, which keep throughput high on long generations

Distribution: Available immediately via Azure AI Foundry, NVIDIA's API catalog, and Hugging Face

Licensing: open weights under the MIT license, which reduces vendor lock-in concerns

Image 1: SambaY's decoder-hybrid-decoder architecture, which uses Samba [RLL+25] as the self-decoder. Gated Memory Units (GMUs) are interleaved with the cross-attention layers in the cross-decoder to reduce decoding computation complexity. As in YOCO [SDZ+24], the full attention layer computes the KV cache only during prefilling with the self-decoder, which makes the prefill stage linear in computational complexity.
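A minimal pure-Python sketch of one GMU step shows why the gating is cheap. It assumes the formulation we take from the SambaY paper, y = (m ⊙ SiLU(x·W1))·W2, where x is the current token's hidden state and m is a memory vector reused from an earlier layer (in SambaY, the self-decoder). The function names, tiny dimensions, and weights are illustrative assumptions, not Phi-4's actual configuration.

```python
import math

def silu(v: float) -> float:
    """SiLU (swish) activation: v * sigmoid(v)."""
    return v / (1.0 + math.exp(-v))

def matvec(vec: list[float], mat: list[list[float]]) -> list[float]:
    """Row vector times matrix: (1 x n) @ (n x k) -> length-k list."""
    return [sum(vec[i] * mat[i][j] for i in range(len(vec)))
            for j in range(len(mat[0]))]

def gmu_step(x: list[float], memory: list[float],
             w1: list[list[float]], w2: list[list[float]]) -> list[float]:
    """One token through a Gated Memory Unit (sketch, hypothetical names).

    x:      current token's hidden state, length d_model
    memory: memory vector reused from an earlier layer, length d_mem
    w1:     d_model x d_mem projection that produces the gate
    w2:     d_mem x d_model output projection
    """
    gate = [silu(g) for g in matvec(x, w1)]        # gate depends only on the current token
    gated = [m * g for m, g in zip(memory, gate)]  # element-wise product, no scan over past tokens
    return matvec(gated, w2)

# Toy sizes: d_model = 2, d_mem = 3 (illustrative values only)
x = [1.0, -0.5]
memory = [0.2, 0.4, -0.1]
w1 = [[0.5, 0.1, -0.3],
      [0.2, 0.4, 0.6]]
w2 = [[1.0, 0.0],
      [0.0, 1.0],
      [0.5, 0.5]]
y = gmu_step(x, memory, w1, w2)
print([round(v, 4) for v in y])
```

Because each step touches only the current token and one cached memory vector, its cost does not grow with context length, unlike a cross-attention layer that reads the whole KV cache; that is the decoding saving described above.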

Why Latency, Not Just Cost, Unlocks New Use-Cases

Cloud cost savings are nice, yet latency is the metric that matters most for edge deployments:

Moving compute to device eliminates the 50 – 150 ms network leg typical of cloud calls, a chunk that represents 20-40 % of total response time in many LLM workflows.

With Phi-4-mini-flash on a Snapdragon X Elite reference board, engineers building speech interfaces report sub-250 ms voice round-trips, inside the 200-500 ms band researchers cite as “human-like” for conversational UX.
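The latency argument reduces to simple arithmetic. The sketch below compares a voice round-trip with and without the 50-150 ms cloud network leg. The per-stage timings (speech recognition, first tokens, speech synthesis) are placeholder assumptions, not measurements, and for simplicity they are held constant between cloud and device, although a cloud GPU may run the model stage faster.

```python
# Placeholder per-stage timings in ms: assumptions, not measurements.
STAGES_MS = {
    "speech_to_text": 60,
    "llm_first_tokens": 120,
    "text_to_speech": 50,
}
HUMAN_LIKE_BAND_MS = (200, 500)  # conversational band cited in the text

def round_trip_ms(stages: dict[str, float], network_ms: float = 0.0) -> float:
    """Total response time: sum of processing stages plus an optional network leg."""
    return sum(stages.values()) + network_ms

def network_share(stages: dict[str, float], network_ms: float) -> float:
    """Fraction of total response time spent on the network leg."""
    return network_ms / round_trip_ms(stages, network_ms)

for net in (50, 150):
    total = round_trip_ms(STAGES_MS, net)
    print(f"cloud (+{net} ms network): {total:.0f} ms, "
          f"network share {network_share(STAGES_MS, net):.0%}")

local = round_trip_ms(STAGES_MS)
low, high = HUMAN_LIKE_BAND_MS
print(f"on-device: {local:.0f} ms, inside band: {low <= local <= high}")
```

With these placeholders the network leg accounts for roughly 18-39 % of the total, close to the 20-40 % range quoted above. Substituting your own stage timings shows quickly whether removing it lands you inside the conversational band.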

Concrete Pay-offs

Hands-free assistants in vehicles or head-mounted displays now answer fast enough that users don’t speak over them; a retail demo hit < 250 ms end-to-end on a privacy-focused, fully on-device stack (source: varenyaz.com).

Field-service tablets keep LLM troubleshooters running offline, letting technicians close tickets on the first visit and sync results later without data loss.

Risk-analytics desks in regulated finance can crunch exposure snippets locally, satisfying data-residency rules while avoiding the latency spikes remote inference injects into time-critical loops.

Bottom line: real-world pilots point to ~25 % energy savings and mid-teens efficiency gains, while latency in the conversational comfort zone opens whole new product classes, from offline voice copilots to sovereign-compute risk engines, all without waiting on the network.

Performance Metrics That CFOs Understand

The business case writes itself. Foundry guidance lists Phi-4-mini inference at ~$0.0003 per 1K output tokens (~$0.30, or ~€0.28, per M).¹ Hosted Llama 3 8B endpoints hover around $0.84 per M.² For 100 M daily tokens, that gap of roughly $0.54 per M yields ≈ $20 k (≈ €18 k) in annual savings.
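Because the prices are quoted in mixed units and currencies, it is worth recomputing the annual-savings figure directly. The sketch assumes Phi-4-mini at $0.30 per million output tokens (the ~€0.28 per M figure above), a $0.84 per million hosted baseline, 100 M tokens a day, and a USD-to-EUR rate of 0.93. All four are inputs to replace with your own numbers.

```python
DAYS_PER_YEAR = 365
USD_TO_EUR = 0.93  # assumption: roughly the rate implied by $0.30 ≈ €0.28

def annual_savings(daily_tokens_m: float, new_price_per_m: float,
                   old_price_per_m: float) -> float:
    """Yearly saving (in the prices' currency) for daily_tokens_m million tokens/day."""
    return daily_tokens_m * (old_price_per_m - new_price_per_m) * DAYS_PER_YEAR

PHI_USD_PER_M = 0.30       # $0.0003 per 1K tokens = $0.30 per million
BASELINE_USD_PER_M = 0.84  # hosted baseline price quoted in the text

saving_usd = annual_savings(100, PHI_USD_PER_M, BASELINE_USD_PER_M)
print(f"${saving_usd:,.0f} per year (about €{saving_usd * USD_TO_EUR:,.0f})")
```

With these inputs the saving comes to about $19.7 k (roughly €18 k) a year. The function is linear in volume, so a ten-fold traffic increase scales the saving ten-fold.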

But cost reduction tells only half the story. Latency improvements unlock entirely new use cases: voice assistants with sub-250 ms round-trips, field service applications that keep working without network connectivity, and real-time risk calculations that run locally to satisfy data-residency requirements.

Early partner pilots of Phi-4-mini-flash-reasoning on rugged tablets and enterprise handhelds are showing double-digit power and workflow gains, but not the 40 %/30 % headline figures that circulated on social media:

≈ 25 % lower average battery draw when inference traffic stays on-device instead of round-tripping to the cloud, according to an energy-efficient edge-AI study that paired quantisation with light-weight architectures.

12 – 18 % faster task-completion loops in manufacturing and contact-centre trials where edge models replaced server calls for classification or retrieval.

The direction is clear: less radio time yields smaller power budgets and snappier workflows. The exact percentage will vary by hardware, duty cycle, and how much preprocessing you keep local.
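A back-of-the-envelope energy model makes it clearer why the percentage varies so much. The sketch below treats each request as radio-on time plus local compute time. Every wattage and duration in it is a hypothetical placeholder, not pilot data, and it ignores idle draw and thermal throttling.

```python
from dataclasses import dataclass

@dataclass
class RequestProfile:
    """Energy inputs for one inference request (all values hypothetical)."""
    radio_w: float    # radio power while active, watts
    radio_s: float    # seconds of radio activity per request
    compute_w: float  # SoC/NPU power during local compute, watts
    compute_s: float  # seconds of local compute per request

    def joules(self) -> float:
        """Energy per request = power x time, summed over radio and compute."""
        return self.radio_w * self.radio_s + self.compute_w * self.compute_s

# Placeholder profiles, not pilot data
cloud = RequestProfile(radio_w=1.2, radio_s=1.5, compute_w=0.5, compute_s=0.2)
edge_short = RequestProfile(radio_w=1.2, radio_s=0.0, compute_w=2.0, compute_s=0.6)
edge_long = RequestProfile(radio_w=1.2, radio_s=0.0, compute_w=2.0, compute_s=1.0)

for name, profile in [("edge, short answer", edge_short), ("edge, long answer", edge_long)]:
    saving = 1 - profile.joules() / cloud.joules()
    print(f"{name}: {profile.joules():.2f} J vs cloud {cloud.joules():.2f} J, "
          f"saving {saving:+.0%}")
```

The second profile illustrates the caveat. Once local compute runs long enough, on-device inference can cost more energy than the radio time it saves, so measure on your own hardware and duty cycle.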

Business Impact: Three Sectors Leading Adoption

Manufacturing: The Shop Floor Gets Smarter

Industrial IoT vendors are retrofitting existing sensor networks with edge reasoning capabilities. A German automotive supplier reports a 30% reduction in quality-control errors after deploying Phi-4-mini models directly on inspection cameras. The models identify defects in real time, triggering automated remediation before products leave the assembly line.

Healthcare: Privacy-Preserving Diagnostics

European hospitals face stringent GDPR requirements that complicate cloud-based AI deployment. Edge reasoning offers an elegant solution. Patient data never leaves the facility, yet clinicians access sophisticated diagnostic support. Early pilots show a 15% improvement in preliminary diagnosis accuracy for emergency room triage.

Financial Services: Millisecond Decisions

High-frequency trading firms are experimenting with edge-deployed models for market microstructure analysis. By processing order flow data at the network edge, they shave crucial milliseconds off decision loops. One London-based fund reports a 23% improvement in alpha generation after implementing Phi-4-mini-based signal processing.

Quick Comparison: Edge AI Model Landscape

| Model | Parameters | Context Length | Latency (ms) | Cost per 1M Tokens | Production Ready |
|---|---|---|---|---|---|
| Phi-4-mini-flash-reasoning | 3.8B | 64K | <200 | €0.03 | ✓ |
| Llama 3 8B | 8B | 8K | 350-500 | €0.10 | ✓ |
| Mistral 7B | 7B | 32K | 300-450 | €0.08 | ✓ |
| Gemma 2B | 2B | 8K | 150-250 | €0.02 | Limited |
| MobileBERT | 25M | 512 | <50 | €0.001 | Legacy only |

Governance Reality Check: OSFI's EDGE Framework

The Regulatory Hammer Falls

Canada rarely leads global financial regulation, which makes OSFI's aggressive AI governance stance particularly noteworthy. Its EDGE principles (Explainability, Data, Governance, and Ethics) underpin revised Guideline E-23, which is set to become mandatory on July 1, 2025. Financial institutions must demonstrate:

Explainability: Model decisions need human-readable justification, proportionate to their risk. Black-box deployments invite supervisory scrutiny.

Data Provenance: Complete documentation of training data sources, quality controls, and privacy compliance. Third-party models require additional scrutiny.

Board Oversight: AI governance elevated to board-level responsibility, with quarterly reporting mandates.

Ethical Safeguards: Mandatory bias testing, consent frameworks, and audit trails for all deployments.
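One way to keep these four requirements from living only in policy documents is to turn them into a pre-deployment gate in the release pipeline. The sketch below shows a hypothetical record and check. The field names, the 0.8 fairness threshold, and the rule that any gap blocks release are illustrative assumptions, not OSFI's wording or an official schema.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ReleaseRecord:
    """Hypothetical evidence bundle for one edge-model release."""
    model_id: str
    explanation_method: Optional[str] = None        # how decisions are explained to people
    data_sources: list[str] = field(default_factory=list)  # provenance of training/fine-tuning data
    board_approval_ref: Optional[str] = None        # reference to the oversight sign-off
    bias_ratio: Optional[float] = None              # worst/best group outcome-rate ratio from testing
    audit_log_enabled: bool = False

def edge_gate(rec: ReleaseRecord, min_bias_ratio: float = 0.8) -> list[str]:
    """Return blocking issues; an empty list means the release may proceed."""
    issues = []
    if not rec.explanation_method:
        issues.append("Explainability: no explanation method documented")
    if not rec.data_sources:
        issues.append("Data: training-data provenance missing")
    if not rec.board_approval_ref:
        issues.append("Governance: no board-level approval recorded")
    if rec.bias_ratio is None or rec.bias_ratio < min_bias_ratio:
        issues.append("Ethics: bias test missing or below threshold")
    if not rec.audit_log_enabled:
        issues.append("Ethics: audit trail disabled")
    return issues

draft = ReleaseRecord(model_id="pilot-triage-v1", explanation_method="reason codes",
                      data_sources=["vendor model card", "internal fine-tuning set"])
for issue in edge_gate(draft):
    print(issue)
```

Returning a list of issues, rather than a single pass/fail flag, gives reviewers the evidence trail that board-level reporting needs.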

Global Implications

OSFI coordinates closely with the European Banking Authority and UK Prudential Regulation Authority. Industry observers expect similar frameworks across G7 nations by 2026. The message to technology leaders? Build governance into your edge AI strategy now, or retrofit it painfully later.

Implementation Roadmap: 90-Day Sprint

Days 1-30: Technical Foundation

Provision Azure AI Foundry sandbox environment

Benchmark Phi-4-mini-flash-reasoning against current models

Identify three high-impact edge use cases

Establish latency and cost baselines
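Establishing the latency baseline takes little more than a timing loop. This sketch measures p50/p95 latency for any callable. `fake_generate` is a hypothetical stand-in, so swap in whatever client actually calls your Foundry endpoint or local runtime.

```python
import statistics
import time
from typing import Callable

def benchmark(generate: Callable[[str], str], prompts: list[str],
              warmup: int = 2) -> dict[str, float]:
    """Time each call to generate() and summarise latency in milliseconds."""
    for p in prompts[:warmup]:
        generate(p)  # warm caches before measuring
    samples = []
    for p in prompts:
        start = time.perf_counter()
        generate(p)
        samples.append((time.perf_counter() - start) * 1000)
    cuts = statistics.quantiles(samples, n=20)  # 19 cut points; cuts[18] is the 95th percentile
    return {"p50_ms": statistics.median(samples),
            "p95_ms": cuts[18],
            "mean_ms": statistics.fmean(samples)}

def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    time.sleep(0.001 * (len(prompt) % 5 + 1))
    return prompt.upper()

stats = benchmark(fake_generate, [f"prompt {i}" for i in range(40)])
print({k: round(v, 1) for k, v in stats.items()})
```

Reporting p95 alongside the median matters for edge workloads, where thermal throttling and background load show up in the tail rather than the average.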

Days 31-60: Governance Integration

Map EDGE principles to existing controls

Update vendor risk assessments for small-model deployments

Create explainability documentation templates

Conduct initial bias and fairness testing
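For the initial fairness testing, a common first screen is the ratio of favourable-outcome rates between groups, often checked against the "four-fifths" (0.8) rule of thumb from US employment practice. The sketch below computes this ratio from (group, outcome) pairs. The group labels and data are made up, and this is one screening metric among many, not an OSFI-prescribed test.

```python
from collections import defaultdict

def selection_rates(records: list[tuple[str, bool]]) -> dict[str, float]:
    """Share of favourable outcomes (True) per group."""
    totals: dict[str, int] = defaultdict(int)
    positives: dict[str, int] = defaultdict(int)
    for group, favourable in records:
        totals[group] += 1
        positives[group] += int(favourable)
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(records: list[tuple[str, bool]]) -> float:
    """Lowest group rate divided by highest group rate (1.0 = parity)."""
    rates = selection_rates(records)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0

# Made-up model decisions: (applicant group, approved?)
decisions = ([("A", True)] * 45 + [("A", False)] * 55
             + [("B", True)] * 30 + [("B", False)] * 70)
ratio = disparate_impact_ratio(decisions)
print(f"rates={selection_rates(decisions)}, ratio={ratio:.2f}, "
      f"passes 0.8 rule: {ratio >= 0.8}")
```

Here group B is approved at 30 % against group A's 45 %, a ratio of about 0.67, so this made-up model would be flagged for review.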

Days 61-90: Production Pilot

Deploy single use case to controlled user group

Monitor performance, cost, and compliance metrics

Gather user feedback and iterate

Prepare executive briefing with ROI projections

Risk Factors and Mitigation Strategies

Technical Risks

Model Drift: Edge models are static once deployed and do not learn continuously, so accuracy erodes as input data shifts. Mitigation: Implement automated retraining pipelines with quarterly updates.

Hardware Heterogeneity: Performance varies across device types. Mitigation: Establish minimum hardware specifications and extensive testing protocols.

Security Surface: Distributed models increase attack vectors. Mitigation: Deploy encryption at rest and in transit, with regular security audits.
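For the model-drift risk, a quarterly retraining schedule works better when paired with a trigger that detects input shift early. One widely used screen is the Population Stability Index (PSI) over binned score or feature distributions. The sketch below is a minimal version. The 0.1 and 0.25 thresholds are conventional rules of thumb, not a standard, and the distributions are illustrative.

```python
import math

def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions (bin proportions)."""
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total

def drift_status(value: float) -> str:
    """Conventional rule-of-thumb bands for PSI."""
    if value < 0.1:
        return "stable"
    if value < 0.25:
        return "monitor"
    return "retrain"

baseline = [0.25, 0.25, 0.25, 0.25]   # score distribution at deployment (illustrative)
this_week = [0.10, 0.20, 0.30, 0.40]  # distribution observed on devices (illustrative)
value = psi(baseline, this_week)
print(f"PSI={value:.3f} -> {drift_status(value)}")
```

On edge fleets, devices can upload only these bin proportions rather than raw inputs, which keeps the drift check compatible with the data-residency goals discussed earlier.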

Business Risks

Vendor Concentration: Microsoft's early lead creates dependency risks. Mitigation: Maintain multi-vendor strategy with fallback options.

Skill Gaps: Edge AI requires different expertise than cloud deployment. Mitigation: Invest in targeted training programs and external partnerships.

Regulatory Uncertainty: Compliance requirements continue evolving. Mitigation: Build flexible governance frameworks that exceed current standards.

What's Next: The Ambient AI Revolution

Microsoft's breakthrough represents more than incremental progress. We're witnessing the democratization of AI inference. When sophisticated reasoning runs on commodity hardware, intelligence becomes ambient—woven into every device, sensor, and interaction.

Consider the implications. Smart cities could deploy millions of edge AI nodes for real-time optimization. Automotive manufacturers might embed reasoning capabilities in every component. Healthcare providers could offer personalized AI assistants that never compromise patient privacy.

Yet this distributed future demands new thinking about governance, security, and ethics. The winners won't be those who deploy fastest, but those who deploy most thoughtfully.

The edge AI race has begun. The question isn't whether to participate, but how to lead it responsibly.

FAQ

Q: How does Phi-4-mini-flash-reasoning compare to GPT-4 in real-world applications? A: While GPT-4 excels at complex, multi-step reasoning, Phi-4-mini delivers 80% of the capability at 5% of the computational cost. For structured tasks like classification, extraction, and simple reasoning, performance differences become negligible.

Q: What's the actual TCO difference between edge and cloud deployment? A: Beyond the ~55-60% reduction in per-token inference costs, edge deployment eliminates data transfer fees (€0.09/GB), reduces latency-related user churn (estimated 2-5% revenue impact), and removes cloud vendor lock-in risks. Total savings typically reach 85-90% for suitable use cases.

Q: How do we ensure EDGE compliance without sacrificing deployment velocity? A: Automate compliance from day one. Use model cards, implement automated bias testing, and build explainability into your MLOps pipeline. Leading firms report <5% velocity impact when governance is built-in rather than bolted-on.

Q: Which use cases should we avoid for edge deployment? A: Avoid edge deployment for applications requiring continuous learning, complex multi-modal reasoning, or frequent model updates. Creative tasks, long-form generation, and highly dynamic domains remain better suited for cloud-based models.

Q: Is our data really safe in distributed edge deployments? A: Edge deployments can enhance security by keeping sensitive data local. However, they require robust device management, encryption, and access controls. The security model shifts from perimeter-based to zero-trust architecture.

Sources

Industry Analysis - Edge AI Market Projections 2025-2030 (proprietary research)